Software Development in the Age of AI

AI coding agents have been around for a while, and I have read and heard countless claims that they will soon replace developers. Whether or not that's true, one thing is certain: many developers today use an AI coding agent when building a feature or resolving an issue. I only recently started using Copilot in VS Code to augment my development workflow. Today I’m sharing that journey, along with some thoughts on working with AI as a coding teammate in a collaborative environment.

AI-assisted development

There are many things AI does well, and it makes sense to use it for those tasks to save time and resources. Over the past months of using Copilot, I have found that AI performs very well on tasks that are small, documented, common, or some combination of the three. Scaffolding a Next.js app with TypeScript, ESLint, Tailwind CSS, and @shadcn/ui is a common and documented process, and AI handles it well. Updating a project's README is a small, natural-language task of the kind LLMs are known to do well. Other tasks that AI can assist with include:

- Brainstorming and planning architectural solutions

- Generating and automating tests

- Optimizing pieces of code

- Recommending possible fixes to issues

- Static code analysis

Software development, however, is not always about small, documented, and common tasks, and there are quite a few things AI can't do well (or at all). When I ask an AI coding agent to work on an agile user story written the way most stories I've worked on are written (with just enough context to be achievable, and only the important details spelled out), it often falls short by hallucinating rules and patterns that weren't there in the first place.

In newer, early-stage projects, the "unwritten" rules and coding practices don't yet form a strong enough pattern for the AI to detect, so it invents its own rules that may or may not be common in other projects. For example, I asked Copilot to create a component and add stories for it to Storybook. The Storybook categories I had in place were Base Components, for atomic components and @shadcn/ui primitives, and UI Components, for bigger, standalone components used in the project. Because the UI Components category didn't have many stories yet, Copilot filed the new component under a more general Components category.

It's often said that AI failures are, more than anything, context management failures, and in this case that may very well be true. The AI coding agent lacked context about the specific project it was working on, and the ticket instructions I gave it were not clear enough for it to produce the desired output. As the software engineer, I needed to bridge that gap.

I had heard of prompt engineering: the process of crafting effective instructions (prompts) to guide generative AI models toward desired outputs. It is most useful for one-off prompts and requests. But there is also a deeper, broader, and more technical practice called context engineering: designing a system that determines what information an AI model receives before it generates a response. In short, it is about giving an AI model the context it needs to generate the desired output. This is what I set out to do next.

Context engineering in practice

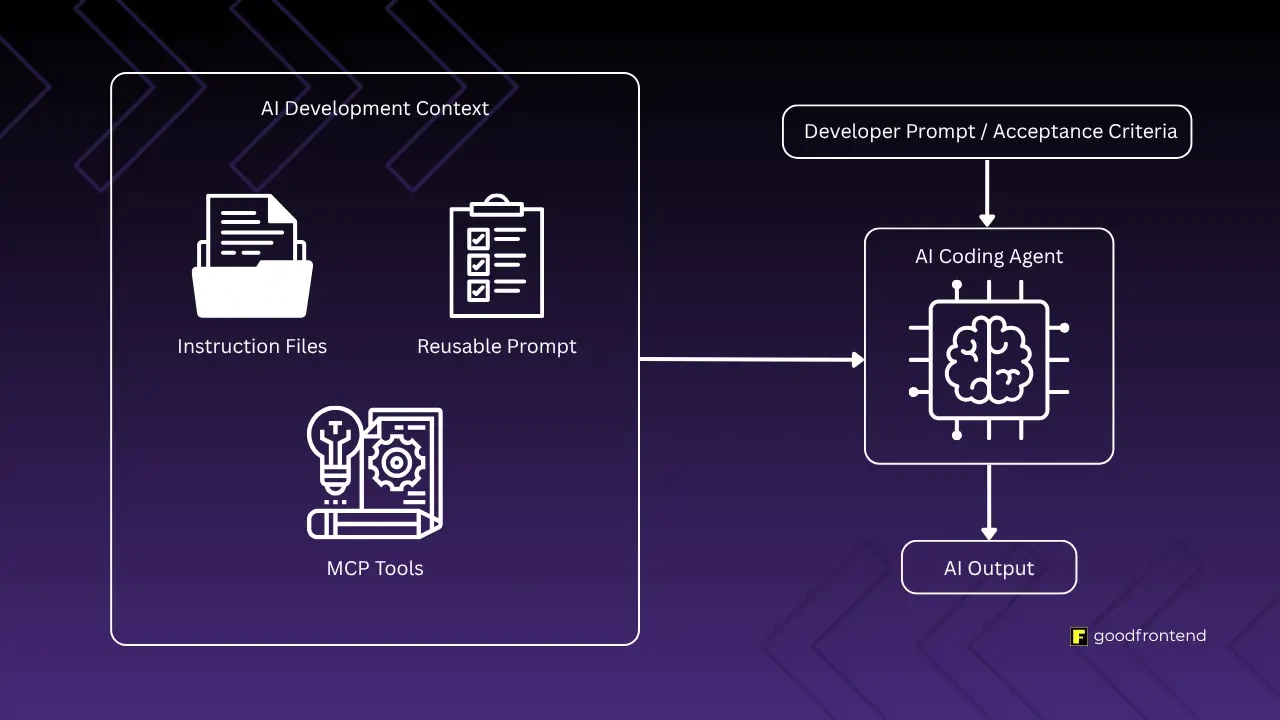

Some say that specification is the new code. It is the scaffolding of a product in the age of “vibe coding” and AI coding agents. A written specification conveys the value, intent, and vision of a product: things an AI model typically wouldn't know or be able to guess. Given the right context, an AI coding agent should produce more desirable outputs. Fortunately, there are already various systems for providing AI models with extra context alongside the user prompt. Here is an overview of the setup I use to enrich AI with context:

Instruction Files

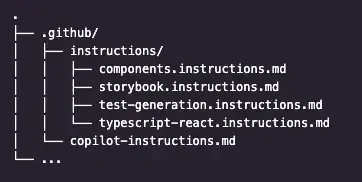

In VS Code, you can provide context to Copilot through one or more instruction files. The simplest is a .github/copilot-instructions.md file containing the basic rules and guidelines Copilot should follow when generating code for your codebase. For more specific instructions, I also created a few custom instruction files (files ending in .instructions.md, which VS Code looks for in .github/instructions by default), as shown in the screenshot above.

I keep instruction files for specific areas of concern, and the nice thing about them is that each one can be scoped to certain files, folders, or file patterns through the applyTo property in its frontmatter. For example, typescript-react.instructions.md applies only to files matching **/*.ts and **/*.tsx, so Copilot automatically includes its contents when working with .ts and .tsx files.
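To make the scoping concrete, here is a minimal TypeScript sketch of how an `applyTo` value such as `"**/*.ts,**/*.tsx"` selects files. It is an illustration, not VS Code's actual matcher: the `globToRegExp` and `appliesTo` helpers and the sample file paths are hypothetical, and the sketch supports only `**`, `*`, and `?`, whereas the real implementation understands a fuller glob syntax.

```typescript
// Hypothetical helper: converts a simple glob into an anchored RegExp.
// Supports "**/" (zero or more directories), "**", "*" (within one path
// segment), and "?" (one character); everything else is matched literally.
function globToRegExp(glob: string): RegExp {
  let pattern = "";
  for (let i = 0; i < glob.length; i++) {
    const ch = glob.charAt(i);
    if (ch === "*" && glob.charAt(i + 1) === "*") {
      if (glob.charAt(i + 2) === "/") {
        pattern += "(?:.*/)?";
        i += 2;
      } else {
        pattern += ".*";
        i += 1;
      }
    } else if (ch === "*") {
      pattern += "[^/]*";
    } else if (ch === "?") {
      pattern += "[^/]";
    } else {
      pattern += ch.replace(/[.+^${}()|[\]\\]/g, "\\$&");
    }
  }
  return new RegExp(`^${pattern}$`);
}

// An applyTo value is a comma-separated list of globs.
function appliesTo(applyTo: string, filePath: string): boolean {
  const normalized = filePath.replace(/\\/g, "/"); // tolerate Windows separators
  return applyTo
    .split(",")
    .map((glob) => glob.trim())
    .filter((glob) => glob.length > 0)
    .some((glob) => globToRegExp(glob).test(normalized));
}

const applyTo = "**/*.ts,**/*.tsx"; // as in typescript-react.instructions.md
// Button.tsx and utils.ts are included; README.md is skipped.
for (const file of ["src/components/Button.tsx", "lib/utils.ts", "README.md"]) {
  console.log(`${file}: ${appliesTo(applyTo, file) ? "included" : "skipped"}`);
}
```

Note that `**/*.ts` does not match `.tsx` files, which is why both patterns are listed.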

Model Context Protocol (MCP)

The Model Context Protocol (MCP) is an open protocol that provides a standardized way to give AI models context from external data sources. Like many APIs, it follows a client-server model: the client, in this case the AI application (such as Copilot in VS Code) acting on behalf of the model, can access the tools that an MCP server exposes. One advantage of MCP is that it is model- and tool-agnostic, so any AI model or AI coding agent that supports MCP servers can use it.
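Under the hood, MCP messages are JSON-RPC 2.0. The sketch below builds the two requests a client typically sends: `tools/list` to discover what a server offers, and `tools/call` to invoke one tool. The `get_issue` tool name comes from the Linear example later in this article; its `id` argument, the `ENG-123` value, and the helper names are hypothetical. A real client would also perform MCP's `initialize` handshake first and send these messages over a transport such as stdio or HTTP.

```typescript
// Minimal JSON-RPC 2.0 request shape used by MCP messages.
interface JsonRpcRequest<P> {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: P;
}

interface ToolCallParams {
  name: string; // tool name as advertised in the server's tools/list response
  arguments: Record<string, unknown>;
}

let nextId = 1;

// Asks the server which tools it exposes.
function listToolsRequest(): JsonRpcRequest<Record<string, never>> {
  return { jsonrpc: "2.0", id: nextId++, method: "tools/list", params: {} };
}

// Asks the server to run one tool with the given arguments.
function callToolRequest(
  name: string,
  args: Record<string, unknown>,
): JsonRpcRequest<ToolCallParams> {
  return {
    jsonrpc: "2.0",
    id: nextId++,
    method: "tools/call",
    params: { name, arguments: args },
  };
}

// Hypothetical example: fetching a ticket through a Linear MCP server.
const messages = [listToolsRequest(), callToolRequest("get_issue", { id: "ENG-123" })];
for (const message of messages) {
  console.log(JSON.stringify(message));
}
```

The client never needs to know how the server fetches the issue; it only needs the tool's name and argument schema, which is what makes MCP model- and tool-agnostic.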

The two servers I use most are Context7 MCP, which provides up-to-date documentation for various tools and frameworks, and Sequential Thinking MCP, which helps the AI model break down complex problems into manageable steps. When I'm working with a new library, Context7 can fetch the latest API documentation, so the AI doesn't suggest deprecated methods or outdated patterns. Centralized knowledge-base tools such as Notion and Atlassian's Confluence have also released their own MCP servers, so AI coding agents can access the user’s knowledge base.

In VS Code, MCP servers can be configured per project in the workspace's .vscode/mcp.json file, which can be committed so the whole team shares the same MCP server setup.
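For reference, here is a TypeScript sketch that produces a .vscode/mcp.json for the two servers mentioned above. It assumes VS Code's format of a top-level `servers` object whose entries use `type: "stdio"` with a `command` and `args`, and it assumes the npm package names `@upstash/context7-mcp` and `@modelcontextprotocol/server-sequential-thinking`; verify both against the current documentation before committing the file.

```typescript
// Shape of a locally launched (stdio) server entry in .vscode/mcp.json,
// as assumed here: a command plus its arguments.
interface StdioServer {
  type: "stdio";
  command: string;
  args: string[];
}

interface McpConfig {
  servers: Record<string, StdioServer>;
}

// Context7 and Sequential Thinking, both started on demand through npx.
const config: McpConfig = {
  servers: {
    context7: {
      type: "stdio",
      command: "npx",
      args: ["-y", "@upstash/context7-mcp"],
    },
    "sequential-thinking": {
      type: "stdio",
      command: "npx",
      args: ["-y", "@modelcontextprotocol/server-sequential-thinking"],
    },
  },
};

// Print the file contents; save the output as .vscode/mcp.json and commit it.
console.log(JSON.stringify(config, null, 2));
```

Keep secrets out of the committed file: for servers that need API keys, mcp.json supports an `inputs` section that prompts each developer for the value instead of hard-coding it.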

Prompt Files

I have about seven MCP servers added to my workspace, and each offers an abundance of tools that the AI coding agent can use. However, VS Code caps the number of tools that can be enabled for a single chat request. If I wanted my AI coding agent to read an issue from Linear and implement it, it would need at least the get_issue tool from the Linear MCP server to fetch the issue details and VS Code's built-in editFiles tool to edit the files in my codebase. When I switch gears and ask it to resolve Codacy issues found in my pull request, it needs tools from the Codacy MCP server instead. Updating the list of tools available to the AI for every prompt quickly becomes cumbersome. This is where prompt files shine.

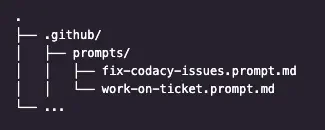

Prompt files are .prompt.md files that live in the .github/prompts folder. They act as reusable prompts that you can run in Copilot Chat by typing a slash (/) followed by the prompt's name. In a prompt file, you can specify the agent to use and the tools the AI is allowed to use when running the prompt. The screenshot above shows an example setup.

In fix-codacy-issues, Copilot may only use tools related to Codacy, file editing, and GitHub (for updating the relevant pull request), whereas work-on-ticket includes Linear tools, file editing tools, and GitHub tools for creating a pull request for the Linear issue. This way, I can specify which tools the AI is allowed to use for the prompts that are part of my usual workflow.
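Here is a hypothetical TypeScript helper that renders text versions of these two prompt files. It assumes the frontmatter keys `description`, `agent`, and `tools` (older VS Code releases used `mode` instead of `agent`). Apart from `get_issue` and `editFiles`, which appear above, the tool names are placeholders; use the identifiers your MCP servers and VS Code actually list in the tool picker. The `maxTools` check is an illustrative guard against the per-request tool cap, and the value 128 below is an assumption to verify against the current VS Code documentation.

```typescript
// A prompt file: YAML frontmatter plus a Markdown body.
interface PromptFile {
  name: string; // saved as .github/prompts/<name>.prompt.md, invoked as /<name>
  description: string;
  agent: string; // assumed key; older VS Code releases used `mode`
  tools: string[];
  body: string;
}

// YAML single-quoted scalar: embedded quotes are escaped by doubling them.
const quote = (value: string): string => `'${value.replace(/'/g, "''")}'`;

// Renders the file, refusing prompts that enable more tools than the limit.
function renderPromptFile(prompt: PromptFile, maxTools: number): string {
  if (prompt.tools.length > maxTools) {
    throw new Error(
      `${prompt.name} enables ${prompt.tools.length} tools; the limit is ${maxTools}`,
    );
  }
  return [
    "---",
    `description: ${quote(prompt.description)}`,
    `agent: ${quote(prompt.agent)}`,
    `tools: [${prompt.tools.map(quote).join(", ")}]`,
    "---",
    prompt.body,
    "",
  ].join("\n");
}

const prompts: PromptFile[] = [
  {
    name: "work-on-ticket",
    description: "Implement a Linear issue and open a pull request",
    agent: "agent",
    // get_issue and editFiles come from the article; create_pull_request is a placeholder.
    tools: ["get_issue", "editFiles", "create_pull_request"],
    body: "Fetch the Linear issue I mention, implement it following the project instructions, then open a pull request.",
  },
  {
    name: "fix-codacy-issues",
    description: "Resolve Codacy issues reported on the current pull request",
    agent: "agent",
    // list_codacy_issues and update_pull_request are placeholder tool names.
    tools: ["list_codacy_issues", "editFiles", "update_pull_request"],
    body: "List the Codacy issues on this pull request, fix them, and push the changes to the same pull request.",
  },
];

// Assumed per-request tool limit; check the current VS Code documentation.
const TOOL_LIMIT = 128;

for (const prompt of prompts) {
  console.log(`.github/prompts/${prompt.name}.prompt.md`);
  console.log(renderPromptFile(prompt, TOOL_LIMIT));
}
```

Keeping each prompt's tool list short and task-specific is the point: the agent sees only what that workflow needs.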

The AI teammate

As a software engineer, I have always worked as part of a team, and every project I’ve been on has been a collaborative one. My own AI-assisted development journey made me think about how AI-assisted development works within engineering teams and what that system might look like. It is becoming increasingly apparent that the modern software engineer's role is to be the context engineer and quality gatekeeper for their AI coding agent: create a system for context management, and use their expertise to evaluate the output thoroughly enough to take ownership of the code the AI produces. After all, we cannot hold machines accountable for the code that we ship.

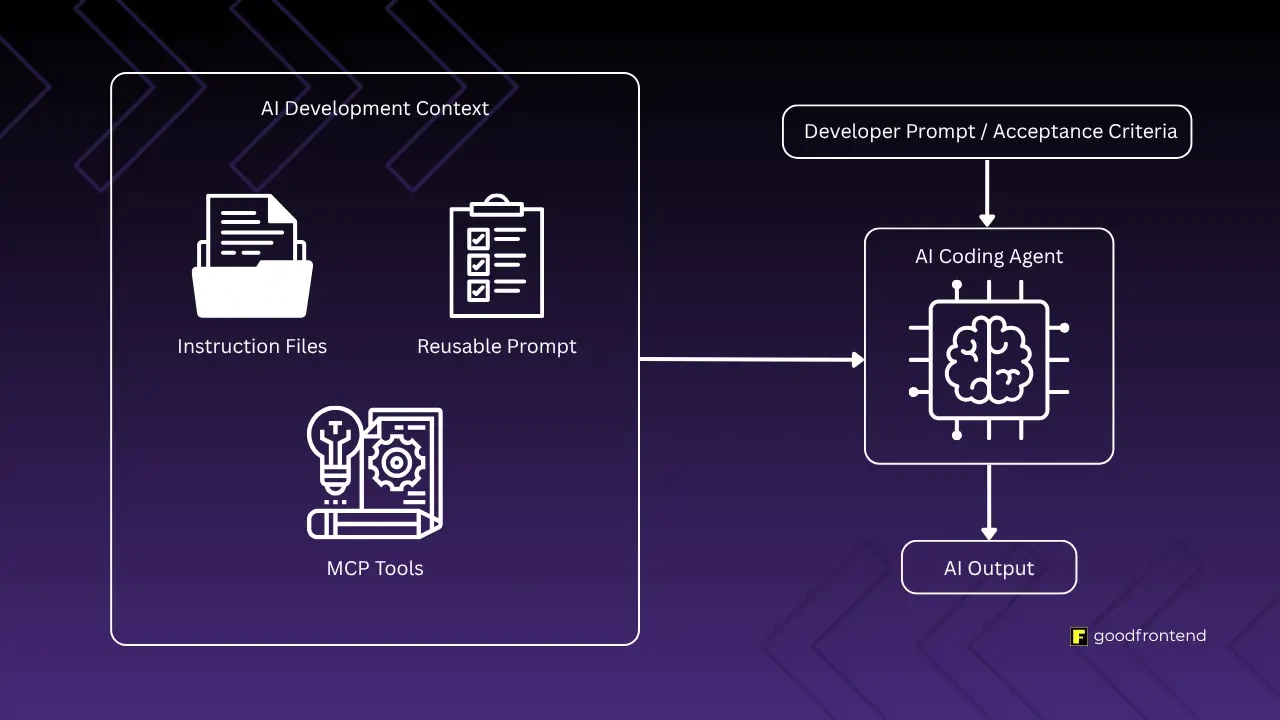

AI Development Context

When working in a team, the project should have a centralized set of documents that teach the agent the team's coding guidelines, context about the codebase, internal coding standards beyond the usual best practices, common patterns and rules, contributing guidelines, and any other areas of development that are useful context for an AI coding agent. There should also be a centralized list of MCP servers that the AI coding agent can be configured with and, optionally, a set of reusable prompts commonly used in the team’s workflow. More generally, I like to think of this system as the AI Development Context (ADC).

I outlined a more concrete example of the ADC in action in the previous sections, and you can also view a sample repository with the basic ADC setup I am working with at rngueco/adc-sample. In this section, I describe the ADC in a collaborative setting, taking into account that team members may use different development tools.

ADC in Workflow

The AI Development Context should be like any other piece of documentation that a software engineer produces. A basic outline of the context it contains would look something like this:

- Project overview

- Project stack (tech stack, frameworks and libraries)

- Coding guidelines

- Agent task scope

- Contributing guidelines (PR and commit conventions)

- Things to avoid (what not to do)

- List of MCP servers
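The outline above can double as a checklist. Below is a hypothetical TypeScript sketch that models the ADC sections as data and reports which ones a draft is still missing; the section keys are my own naming for the list above, and the sample draft content is illustrative only.

```typescript
// One key per section of the ADC outline (names are illustrative).
const ADC_SECTIONS = [
  "overview",
  "stack",
  "codingGuidelines",
  "agentTaskScope",
  "contributing",
  "thingsToAvoid",
  "mcpServers",
] as const;

type AdcSection = (typeof ADC_SECTIONS)[number];

// A draft ADC may have only some sections written so far.
type AdcDraft = Partial<Record<AdcSection, string>>;

// Lists the sections that are absent or contain only whitespace.
function missingSections(draft: AdcDraft): AdcSection[] {
  return ADC_SECTIONS.filter((section) => !draft[section]?.trim());
}

const draft: AdcDraft = {
  overview: "Example web app built with Next.js and TypeScript.",
  stack: "Next.js, TypeScript, Tailwind CSS, @shadcn/ui, Storybook",
  thingsToAvoid:
    "Do not create new Storybook categories; use Base Components or UI Components.",
};

// Prints: codingGuidelines, agentTaskScope, contributing, mcpServers
console.log("Missing ADC sections:", missingSections(draft).join(", "));
```

The `thingsToAvoid` entry shows how an unwritten rule, such as the Storybook categories from earlier, becomes explicit context once it is written down.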

It should be the result of a collaborative team effort, and it should be updated regularly based on the team's feedback about how AI coding agents behave when following the ADC. When updating the ADC, the team should be able to answer questions like:

- What should we explicitly tell the AI not to do?

- What unspoken rules and patterns should we make visible to the AI?

- What other context is missing from the ADC that causes the AI to fail?

This can be done during team retrospectives, alongside the usual retrospective topics.

ADC Outside of VS Code and Copilot

Developing an AI Development Context system within a team is very doable when everyone uses the same tools. I was able to create the foundations of an ADC in VS Code with Copilot instruction files, prompt files, and workspace settings for the MCP server list. However, not every developer prefers VS Code or Copilot. Many other tools, like Cursor, Claude Code, and Cline, also accept instructions, rules, or guidelines. The problem is that each of these tools uses its own file conventions and systems, which makes a single source of truth difficult to maintain.

Emerging tools like ContextHub (CTX) aim to bridge this gap: you provide a single .ai-context.md file as the source of truth, and CTX generates symlinks from each tool's configuration file to .ai-context.md. However, this only yields a single configuration file serving as the overall coding guideline for an AI agent, so the need for specificity and customization is not met. Moreover, as more tools emerge, we will depend on CTX updates to support each new configuration file convention.
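To illustrate the symlink approach, here is a Node.js sketch in TypeScript that points several tools' instruction files at one .ai-context.md. It is not CTX's implementation, and the target paths are assumptions based on common conventions (CLAUDE.md for Claude Code, .github/copilot-instructions.md for Copilot, .clinerules for Cline, and Cursor's legacy .cursorrules file); check each tool's documentation, since these conventions change. It also demonstrates the limitation described above: every tool receives the same single file.

```typescript
import {
  existsSync,
  lstatSync,
  mkdirSync,
  mkdtempSync,
  readFileSync,
  symlinkSync,
  unlinkSync,
  writeFileSync,
} from "node:fs";
import { tmpdir } from "node:os";
import { dirname, join, relative } from "node:path";

// Assumed per-tool instruction file locations; verify against each tool's docs.
const TARGETS: Record<string, string> = {
  "Claude Code": "CLAUDE.md",
  "GitHub Copilot": ".github/copilot-instructions.md",
  Cline: ".clinerules",
  "Cursor (legacy rules file)": ".cursorrules",
};

function isSymlink(path: string): boolean {
  try {
    return lstatSync(path).isSymbolicLink();
  } catch {
    return false; // path does not exist
  }
}

// Points every tool's instruction file at one shared source of truth.
function linkContext(root: string, source = ".ai-context.md"): string[] {
  const sourcePath = join(root, source);
  if (!existsSync(sourcePath)) {
    throw new Error(`missing source of truth: ${sourcePath}`);
  }
  const linked: string[] = [];
  for (const target of Object.values(TARGETS)) {
    const targetPath = join(root, target);
    mkdirSync(dirname(targetPath), { recursive: true });
    if (isSymlink(targetPath)) {
      unlinkSync(targetPath); // refresh a link from a previous run
    } else if (existsSync(targetPath)) {
      continue; // never overwrite a real, hand-written file
    }
    // Relative links keep working when the repository is cloned elsewhere.
    symlinkSync(relative(dirname(targetPath), sourcePath), targetPath);
    linked.push(target);
  }
  return linked;
}

// Demo in a throwaway directory so no real repository is touched.
function demo(): string {
  const root = mkdtempSync(join(tmpdir(), "adc-"));
  writeFileSync(join(root, ".ai-context.md"), "# Project rules\n");
  console.log("linked:", linkContext(root).join(", "));
  // Every tool now reads identical content: convenient, but not file-scoped.
  console.log(readFileSync(join(root, "CLAUDE.md"), "utf8"));
  return root;
}

demo();
```

On Windows, creating symlinks may require Developer Mode or elevated privileges, which is one more reason a tool-level standard would be preferable to link farms.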

The big ask: as more AI coding agents enter the market as “AI coding teammates,” the need for a standardized configuration file convention grows. To truly integrate AI into our workflow, we need a standardized system that lets us empower it as we would any teammate.

AI in Code Reviews

Asking AI for a code review is easier than ever. GitHub and GitLab offer paid AI review features, GitHub Copilot and GitLab Duo respectively, that can review your team’s pull requests and merge requests; it is as simple as assigning them as a reviewer. The team can also collaborate on custom code review instructions for these AI agents. For GitHub Copilot in VS Code, create an instruction file for code review and set the workspace setting github.copilot.chat.reviewSelection.instructions to point to that file. For GitLab Duo, custom instructions can be authored in the .gitlab/duo/mr-review-instructions.yaml file.
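As a sketch of the VS Code side: as far as I know, `github.copilot.chat.reviewSelection.instructions` takes an array of entries that each reference a `file` (or contain inline `text`), but verify this against your VS Code version. The TypeScript below merges such an entry into a settings object without duplicating it; the `addReviewInstructions` helper and the `.github/instructions/code-review.md` path are hypothetical examples.

```typescript
// Entry shape assumed for Copilot's review-instruction setting:
// either a path to a Markdown file or inline text.
type InstructionEntry = { file: string } | { text: string };

type Settings = Record<string, unknown>;

const REVIEW_KEY = "github.copilot.chat.reviewSelection.instructions";

// Adds a review-instructions file to workspace settings without duplicating it.
function addReviewInstructions(settings: Settings, file: string): Settings {
  const current = Array.isArray(settings[REVIEW_KEY])
    ? (settings[REVIEW_KEY] as InstructionEntry[])
    : [];
  const alreadyListed = current.some((entry) => "file" in entry && entry.file === file);
  return {
    ...settings,
    [REVIEW_KEY]: alreadyListed ? current : [...current, { file }],
  };
}

// Hypothetical path; the result is what would go into .vscode/settings.json.
const settings = addReviewInstructions({}, ".github/instructions/code-review.md");
console.log(JSON.stringify(settings, null, 2));
```

A real script would need a JSONC-aware parser to read an existing .vscode/settings.json, since VS Code allows comments in that file.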

Each developer should also make it part of their workflow to ask their AI coding agent to review their changes before opening a pull request. More often than not, small issues like typos, naming inconsistencies, or pattern mismatches will be caught this way.

Conclusion

My journey with AI coding agents has revealed that the most successful implementations occur when we treat AI not as a replacement for human expertise, but as a sophisticated teammate that requires careful context management and collaborative integration.

AI as a coding teammate is becoming more feasible and frictionless every day. However, there are still hurdles to overcome before we arrive at a stable system that works for most teams. The concept of AI Development Context (ADC) that I've outlined here is a practical step toward that future. By creating standardized documentation that bridges the gap between human domain knowledge and AI capabilities, we can harness the efficiency of AI while maintaining the quality and intentionality that human expertise provides.

Looking ahead, the teams that will be most successful with AI-assisted development are those that invest in systematic context engineering, treat AI integration as a collaborative process requiring regular refinement, and maintain clear ownership and accountability structures. As AI handles more of the syntactic heavy lifting, the software developer's role is evolving to emphasize specification writing and system design, skills that require deep domain understanding and strategic thinking that AI cannot replicate. The future isn't about AI replacing developers; it's about developers and AI working together more effectively than either could alone.

About the Contributor

Riyana Elizabeth Gueco, Frontend Engineer

Riyana Elizabeth GuecoView ProfileFrontend Engineer

Discuss this topic with an expert.

Related Topics

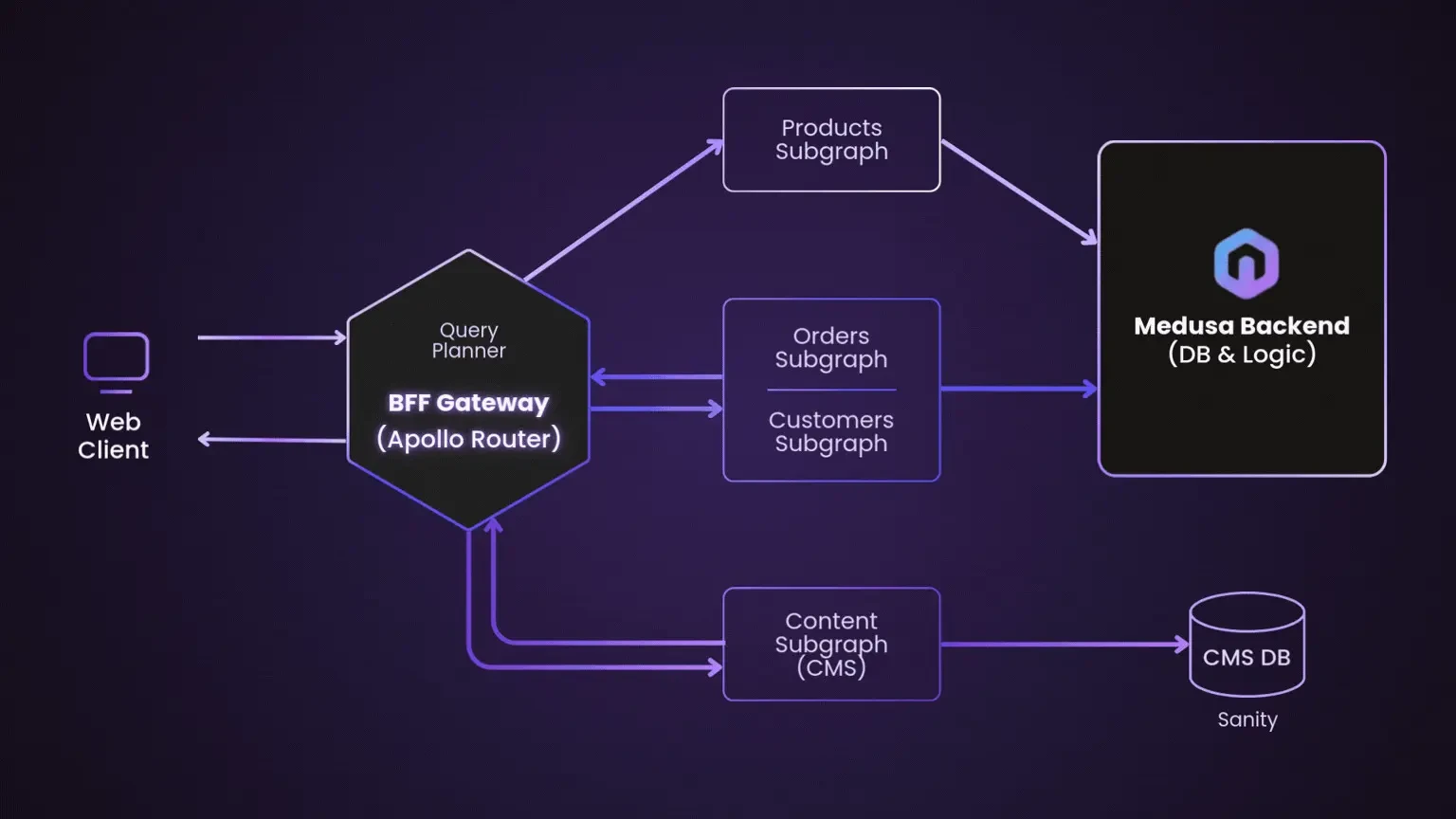

Evolving Storefront Architecture with Backend-For-Frontend (BFF)As our clients expanded into new markets and channels, maintaining separate integrations for each API became costly and time-consuming. Our platform’s adoption of GraphQL changed that — creating a single data layer that unifies product, pricing, and content APIs into one flexible interface.

Level Up Shopping: How Gamification Is Transforming E-CommerceHow AI and Playful Design Are Redefining Customer Loyalty

Invisible UX: When the best interface is no interfaceTurns out less really is more.