Bloomreach: How automating tasks can help you be efficient & consistent

The TLDR:

“Automate when you need to, not when you want to.”

Why: Efficiency, consistency, & convenience.

When: To address repetitive tasks, reduce human error, & make things easier.

Whenever the question of automating comes up during development, it is tempting to assume that automating everything is always good, but that is not always the case...

The road travelled

This is the story of how we automated Bloomreach component & document CRUD operations, which in turn helped us migrate those components & documents to different environments consistently. For context, Bloomreach has a web UI & a Swagger API to create, update, and delete components & documents. Since this was our first encounter with Bloomreach CMS, working by hand through the web UI and Swagger API was fine for the most part, especially since we were still fiddling with our workflow & process. Over time, as we became more familiar with the Bloomreach system, we settled into a workflow of creating components only as we needed them, modifying existing ones where they fit the use case, and gradually using the API more than the web UI.

Once we were familiar with Bloomreach CMS, we proceeded with development. Our goal was to have consistent components & documents across channels in different environments, from smoke, to test, up to production. The problem was that Bloomreach had no built-in migration feature, which meant that to move a component from one environment to another, you had to manually create the components/documents again. Also, when you modified a component in one environment, you then had to manually apply the same changes to the others to keep everything consistent across the board. With such a limitation, the manual effort grows roughly in proportion to n * m * e, where n is the number of components/documents in a project, m is the number of modifications made during the development cycle, and e is the number of environments.

We came up with a combination of two solutions to address the problem. First, to address consistency, we added the component & document schemas to the repo itself, so that the repo would be the “source of truth” and the basis for all environments. Second, we automated the CRUD operations, which would reduce human error & save the time spent repeatedly creating the same components/documents.

As the project came to a close, we did a retrospective of what we had done so far, and I am happy to have implemented automation for the CRUD operations. The impact that properly implemented automation had on the project is clear to me. There were a lot of lessons learned, and I don’t think we would have had an easy time keeping things consistent, or been as efficient as we wanted to be, if we had not implemented those CRUD automations.

The lessons learned

When & Why you should automate

Whenever the question of automating comes up during development, it is tempting to assume that automating everything is always good, but that is not always the case. Some things are done only once during the development life cycle and never touched again. For things like this, automation would be a waste of your time. We should always keep in mind the KISS (Keep it simple, stupid) & YAGNI (You ain’t gonna need it) principles. If we follow these basic principles whenever we create automation scripts, we can be confident that they will be useful and serve their purpose as you or your team progress with the project. To paraphrase the famous line from Jurassic Park:

“Your scientists were so preoccupied with whether or not they could, they didn’t stop to think if they should.” -Dr. Ian Malcolm

To elaborate, automation should help you and the team during the development process by addressing any or all of the following: efficiency, consistency, and convenience.

Efficiency - this just means you automate repetitive tasks that you do during your development process. This frees up time for other, more important things.

Consistency - this is when you automate to reduce human error. It helps you avoid debugging problems later because something was forgotten or implemented incorrectly. One of the most common use cases is migration.

Convenience - this is included not because it has a direct impact you can measure, like the first two reasons, but because it has an indirect impact on you and your team. Automation for the sake of convenience relieves you of “some” stress/hassle through ease of use, and keeping developers in tip-top shape throughout the development cycle is always good, since people who are stress-free and relaxed tend to perform better than those who are not. The benefits of automating for convenience are less obvious, but they are there: they are something you can FEEL rather than MEASURE.

The reasons above are why I think you should automate. I also think “to learn” is always reason enough to do anything or pursue any endeavour, but this is more my personal motivation and philosophy, since different companies have different policies regarding learning a skill on the job. You can always take the time yourself to learn how to automate things before you are faced with a situation that needs it. While I believe any decent developer can learn on the spot, you will always perform better when you’re familiar with whatever it is you're attempting to do.

Unit Test

Writing unit tests is always a good practice. If you are having problems unit testing your automation, it’s a sign that the code might be too tightly coupled, and you should consider refactoring it. Especially when you're automating a repetitive task, you want to make sure that your automation behaves as intended; otherwise, it will end up costing you more time than it saves. When you automate something, you should have confidence that it is doing what it’s supposed to, and unit tests give you that confidence.

The implementation

Handling API requests using Axios

To automate the API requests, I used Axios to create an instance whose parameters are based on environment variables. This lets you point the Axios instance at a different API environment just by changing the environment variables, without touching the codebase. This is in the spirit of the Open/Closed principle from SOLID, and it keeps this part of the automation testable and flexible.
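A rough sketch of that idea follows. The environment variable names (`BRX_API_BASE_URL`, `BRX_API_TOKEN`, `BRX_API_TIMEOUT_MS`) and the auth header are assumptions made for illustration, not the project's actual configuration. The config is built by a small pure function so it can be checked without Axios or a network.

```javascript
// Build the Axios config purely from environment variables.
// BRX_API_BASE_URL, BRX_API_TOKEN and BRX_API_TIMEOUT_MS are hypothetical
// names used only in this sketch.
function buildClientConfig(env) {
  if (!env.BRX_API_BASE_URL) {
    throw new Error('BRX_API_BASE_URL is not set');
  }
  return {
    baseURL: env.BRX_API_BASE_URL,
    timeout: Number(env.BRX_API_TIMEOUT_MS || 10000),
    headers: {
      // Placeholder auth header; use whatever your API actually expects.
      Authorization: `Bearer ${env.BRX_API_TOKEN || ''}`,
      'Content-Type': 'application/json',
    },
  };
}

// Axios is required lazily so the config builder stays testable on its own.
function createClient(env = process.env) {
  const axios = require('axios');
  return axios.create(buildClientConfig(env));
}

module.exports = { buildClientConfig, createClient };
```

Switching from a test environment to production is then a matter of running the same code with a different set of environment variables.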

Executing CRUD file operations using Node.js

Implementing the CRUD file operations was more straightforward, as it was a matter of using the fs module in Node.js together with async/await to write the data returned by the API request into a file. One important thing I realized here is to always give feedback by writing to the console, so the user is aware of the success or failure of the method and what caused it. That way, if the automation ever failed, I would be better informed about what steps to take to address the issue.

One of the final touches I added was to format the text before writing it into the file by calling Prettier's format function. It served no functional benefit; it was just cleaner.
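The file-writing step described above could look roughly like this. It is a sketch under assumptions: the directory layout and the `name` field on the schema are hypothetical, and `JSON.stringify` with an indent stands in for the Prettier call so the example has no third-party dependencies.

```javascript
const fs = require('node:fs/promises');
const path = require('node:path');

// Write one component/document schema to disk as formatted JSON.
// The directory layout and the `name` field are assumptions of this sketch.
async function saveSchema(directory, schema) {
  const filePath = path.join(directory, `${schema.name}.json`);
  try {
    await fs.mkdir(directory, { recursive: true });
    // JSON.stringify with an indent stands in for the Prettier call here.
    await fs.writeFile(filePath, `${JSON.stringify(schema, null, 2)}\n`, 'utf8');
    console.log(`Saved ${filePath}`);
    return filePath;
  } catch (error) {
    // Report what failed and why, then let the caller decide what to do.
    console.error(`Failed to save ${filePath}: ${error.message}`);
    throw error;
  }
}

// Read a schema back, e.g. before pushing it to another environment.
async function loadSchema(filePath) {
  const text = await fs.readFile(filePath, 'utf8');
  return JSON.parse(text);
}
```

Logging both the success and the failure path is what makes a failed run easy to diagnose later.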

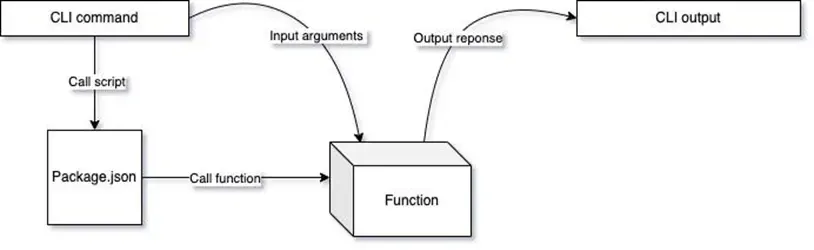

Adding CLI commands using Node.js

So that everything could be executed through the CLI, I then added scripts that are referenced in the package.json file. The concept was fairly simple: use the Node.js process object to read the command-line arguments, pass them on to the function that will be called, and let that function handle the rest.

Tying it all together

The entire automation process is then as follows: the user enters a CLI command, which calls a script based on the command. The script, in turn, reads & handles the Node.js process arguments and calls the related function, passing on the arguments (if there are any). The function is then responsible for making the API request and performing the CRUD file operations depending on the response.

In the end, we would simply navigate to the project, possibly make changes to the schema in the repo, run a CLI command, and the changes would be made. We had commands as simple as “yarn component:push”, which would push all changes to the Bloomreach CMS. It just works!
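To illustrate how a push command might tie these pieces together, here is a hedged sketch. `pushAll` is a hypothetical name, the `/components/<name>` path is a placeholder rather than the real Bloomreach endpoint, and `client` is anything with a `put(url, body)` method, such as an Axios instance.

```javascript
const fs = require('node:fs/promises');
const path = require('node:path');

// Push every schema file in a directory through an HTTP client.
// `client` is anything with a put(url, body) method, such as an Axios
// instance; the '/components/<name>' path is a placeholder.
async function pushAll(client, directory) {
  const fileNames = (await fs.readdir(directory))
    .filter((fileName) => fileName.endsWith('.json'))
    .sort();
  const results = [];
  for (const fileName of fileNames) {
    const text = await fs.readFile(path.join(directory, fileName), 'utf8');
    const schema = JSON.parse(text);
    try {
      await client.put(`/components/${schema.name}`, schema);
      console.log(`Pushed ${schema.name}`);
      results.push({ name: schema.name, ok: true });
    } catch (error) {
      // Keep going so one bad component does not block the rest.
      console.error(`Failed to push ${schema.name}: ${error.message}`);
      results.push({ name: schema.name, ok: false });
    }
  }
  return results;
}
```

Continuing after a failed component and returning a per-item result is one possible design; stopping at the first error would be just as valid if partial pushes are undesirable.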

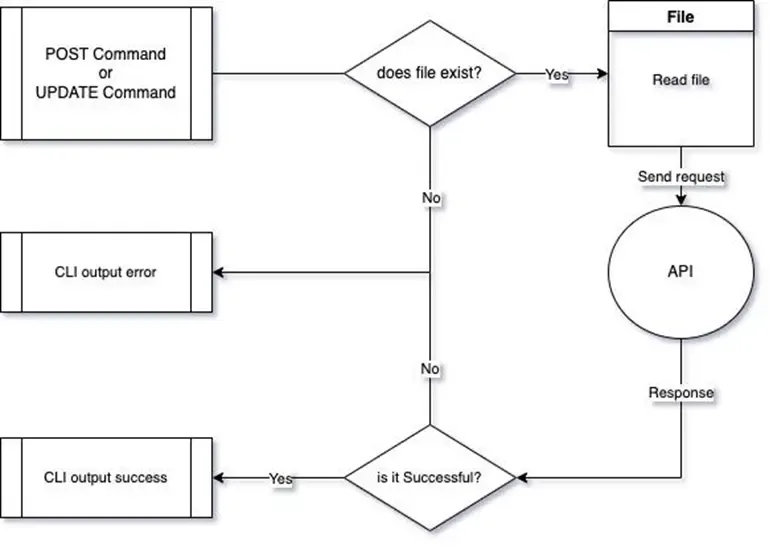

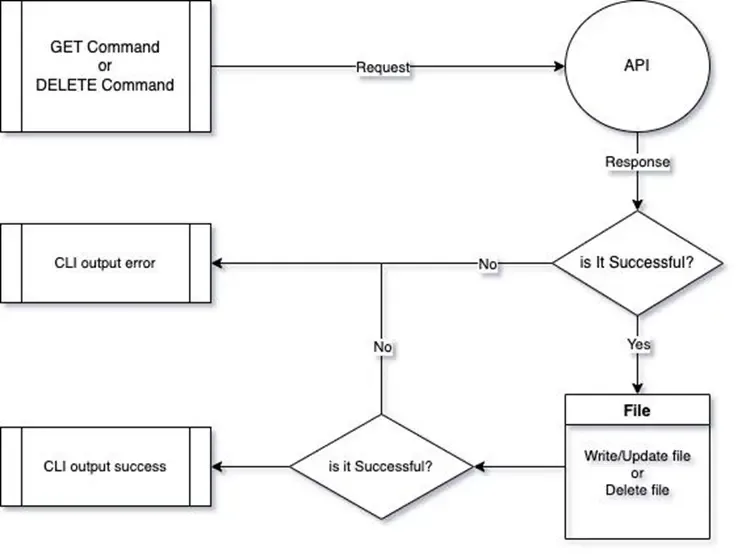

The implementation visually

The push/pull commands are simply an iterative implementation of these basic commands.

Post & Update command

Get & Delete command

About the Contributor

Kenneth De Leon, Cadet Software Engineer
